Help & FAQ

Learn about the data, and how to use our interactive visualizations.

Glossary

Administrative District

Administrative school districts are defined per NCES, and the schools operated by each district are identified using the NCES leaid. We use the term sedaadmin to refer to administrative school districts in our datasets. Note that this is distinct from the sedalea, or geographic district, used in SEDA 4.1.

Understanding the Data

How can I download the data?

Check out the “SEDA2023” tab on the Get the Data page to gain access to the full dataset.

How are changes in average test scores calculated?

We construct average 2019, 2022, and 2023 math (or reading) test score estimates using state standardized math (or Reading Language Arts (RLA)) tests taken by public-school students in grades 3 through 8 in each year, and data from the NAEP. The change estimates in each subject are calculated as the difference in the average test score estimates between the two years. For example, the 2022-2023 change is calculated as the average math or reading test score in 2023 minus that in 2022.
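As a minimal sketch of the subtraction described above, the following Python snippet computes the three change measures from a set of average scores. The score values and the `change` helper are hypothetical and only illustrate the arithmetic; they are not SEDA estimates or SEDA code.

```python
# Hypothetical average math scores (in grade levels) for one district.
# These numbers are illustrative only, not real SEDA estimates.
avg_math = {2019: 0.40, 2022: -0.10, 2023: 0.05}

def change(scores, start_year, end_year):
    """Return the later year's average minus the earlier year's average."""
    return round(scores[end_year] - scores[start_year], 2)

change_2019_2022 = change(avg_math, 2019, 2022)
change_2022_2023 = change(avg_math, 2022, 2023)
change_2019_2023 = change(avg_math, 2019, 2023)

print(change_2019_2022, change_2022_2023, change_2019_2023)
```

In this made-up example the 2019-2023 change is the sum of the other two changes, which is what the definition implies whenever all three years are available.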

How is free/reduced-price lunch percentage calculated and what does it mean?

The free/reduced-price lunch percentage measures the proportion of students in the school who are eligible for free or reduced-price lunches through the National School Lunch Program. Students are eligible for free or reduced-price lunches if their family income is below 185% of the poverty threshold. A school with a free lunch rate of 0% has no poor or near-poor students; the higher the free lunch rate, the greater the number of poor students. The lower the free/reduced-price lunch percentage, the more affluent the school. The data is obtained from the National Center for Education Statistics (NCES) Common Core of Data (CCD) database. We use multiple imputation to address two known issues in the free/reduced-price lunch data from the CCD: (1) the substantial missing data and (2) changes in reporting due to the community eligibility program in recent years. See the SEDA 4.1 technical documentation for more detail.

What is “percent of year in remote/hybrid learning” and how is it calculated?

Percent of year in remote or hybrid learning is a measure of the percent of the 2020-21 school year that all students received remote and/or hybrid instruction, calculated from data courtesy of the American Enterprise Institute’s Return to Learn Tracker and COVID-19 School Data Hub.

What is “ESSER funds (% of budget)” and how is it calculated?

Total ARP Elementary and Secondary School Emergency Relief (ESSER) funds are reported as a percent of the pre-pandemic district instructional budget, courtesy of Georgetown University’s Edunomics Lab. Instructional budgets are obtained from the National Center for Education Statistics (NCES) Common Core of Data (CCD) database. Only ARP ESSER is represented; ESSER I and ESSER II allocations are not included.
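The ratio described above can be sketched as follows. The function name and the dollar amounts are hypothetical, chosen only to show how a percent of budget is formed.

```python
def esser_percent_of_budget(arp_esser_dollars, instructional_budget_dollars):
    """ARP ESSER allocation as a percent of the pre-pandemic instructional budget."""
    if instructional_budget_dollars <= 0:
        raise ValueError("instructional budget must be positive")
    return 100.0 * arp_esser_dollars / instructional_budget_dollars

# Hypothetical district: $3M in ARP ESSER funds, $20M pre-pandemic instructional budget.
pct = esser_percent_of_budget(3_000_000, 20_000_000)
print(f"{pct:.1f}%")
```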

Why is my district not on the map or in the data?

There are several reasons why we may not show data for a particular state or district.

Estimates for all districts in a state may be missing because:

- Sufficient data for estimation were not reported by the state.

- Fewer than 94% of students in the state participated in testing in the subject in 2019, 2022, or 2023.

- The state changed its test or proficiency thresholds between 2022 and 2023.

Estimates for an individual district may be missing because:

- Sufficient data for estimation were not reported for the district.

- The district is too small and/or has too few grades of data available to allow for the construction of reliable estimates.

- The district does not have a geographic boundary; such districts include charter districts and/or specialized local education agencies.

- Fewer than 95% of students in the district participated in testing in the subject in 2019.

- More than 20% of students in the district took alternative assessments rather than the regular tests in 2019.

For more details, see the technical documentation.
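Three of the district-level rules above are simple threshold checks, sketched below. The record fields and function name are hypothetical (they are not SEDA variable names), and the sketch leaves out the rules about insufficient reported data and small districts, which depend on details given in the technical documentation.

```python
from dataclasses import dataclass

@dataclass
class DistrictRecord:
    # All field names are hypothetical; they are not SEDA variable names.
    name: str
    has_geographic_boundary: bool
    participation_rate_2019: float   # share of students tested, from 0 to 1
    alt_assessment_rate_2019: float  # share taking alternative assessments, from 0 to 1

def suppression_reasons(d):
    """Return the listed reasons, if any, that a district would lack estimates."""
    reasons = []
    if not d.has_geographic_boundary:
        reasons.append("no geographic boundary")
    if d.participation_rate_2019 < 0.95:
        reasons.append("participation below 95% in 2019")
    if d.alt_assessment_rate_2019 > 0.20:
        reasons.append("more than 20% took alternative assessments in 2019")
    return reasons

example = DistrictRecord("Example District", True, 0.93, 0.05)
print(suppression_reasons(example))
```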

Using the Opportunity Explorer

What are the different ways of exploring the data?

The Educational Opportunity Explorer offers three different ways of looking at educational opportunity in the U.S.: a map, a chart, and a “split screen” view of both. Use the Map | Chart | Map + Chart buttons in the header to select one of these views.

What data can I choose from?

District data are available for most states; more states will be added as data become available. At the top of this Explorer, you can select from 6 Key Measures of educational opportunity to display in the map and chart:

- 2019-2022 Change in Average Math and Reading Scores, which reflect the difference in educational opportunities and experiences between cohorts of students tested in grades 3-8 in 2019 and 2022.

- 2022-2023 Change in Average Math and Reading Scores, which reflect the difference in educational opportunities and experiences between cohorts of students tested in grades 3-8 in 2022 and 2023.

- 2019-2023 Change in Average Math and Reading Scores, which reflect the difference in educational opportunities and experiences between cohorts of students tested in grades 3-8 in 2019 and 2023.

Performance is measured in grade levels, relative to the national average in grades 3-8 in 2019. Just below these buttons, you can use the left-side Data Options panel to filter by demographic (e.g., “all students”) and by the type of places (states or school districts) you’d like to display. In the Chart view, you can choose to view the entire country or individual states.

What do the colors in the map and charts mean?

Light gray represents zero, or “no change” in average test scores.

Positive changes in average test scores are shown in green and negative changes in blue. Darker shaded circles represent larger changes.

Colorblind users: We have made efforts to ensure accessibility for the most common forms of colorblindness. For less common forms (such as tritanopia), colors may be less distinguishable; however, the data are still accessible in the map legend, charts, and other displays.

What kinds of locations can I view?

You can view administrative school district data for most states, as well as state-level estimates. To change between location types, use the Region menu in the Data Options panel at left.

How can I find a location and view its data?

You can navigate to your desired location using the navigation controls in the map, or type a location name into the search bar in the header.

Hovering (or on a touch device, tapping) on a location in the map or chart will show an overview of that location’s data. Clicking or tapping on the location will open a Location Panel that shows a full view of all available data, as well as options for viewing other selected locations.

Clicking or tapping on locations will also add them to the Locations menu within the left-side Data Options panel. (You can add an unlimited number of locations.) Click or tap on any location’s tab to highlight it in the map or chart, and the Locations menu.

How many locations can I select at once?

You can select an unlimited number of locations at once. Selected locations will be stored in the Locations menu within the left-side Data Options panel.

How do I see all the data for a location at once?

Clicking or tapping on any location will open a Location Panel that offers a full view of the data.

How can I filter the data?

In the Data Options column displayed on the left, click Data Filters. Using the controls in this panel, you can filter what data is displayed on the map.

When multiple selections are made, the filters work together to narrow what is displayed. To return to the default view, click the “Reset data filters” button. If a filter is not available, you will not be able to select it.

What does the chart show?

The chart shows districts in the U.S. Each circle represents a district, and the circle’s size is proportionate to the number of students (e.g., a larger circle is a district with more students). On the vertical (Y) axis is the range of the selected metric in grade levels. The horizontal (X) axis shows one of three variables (you can select which you would like to show): the free and reduced lunch eligibility rate in the district, the percent of the school year spent remotely during the pandemic, or the ESSER funding received by the district.

What does the map show?

This map can be customized to show the change in math and reading test scores between 2019 and 2022, 2022 and 2023, or 2019 and 2023 for students in U.S. districts or states. The test scores represented here were collected in grades 3-8 between 2018-19 and 2022-23 at public elementary and middle schools in the U.S.

Glossary

Average Test Score

The average test score indicates how well the average student in a school, district, or county performs on standardized tests. Importantly, many factors—both early in life and when children are in school—affect test performance. As a result, the average test scores in a school, district, or county reflect the total set of educational opportunities children have had from birth through middle school, including opportunities at home, in child-care and preschool programs, and among peers. Average test scores therefore reflect a mix of school quality and out-of-school educational opportunities.

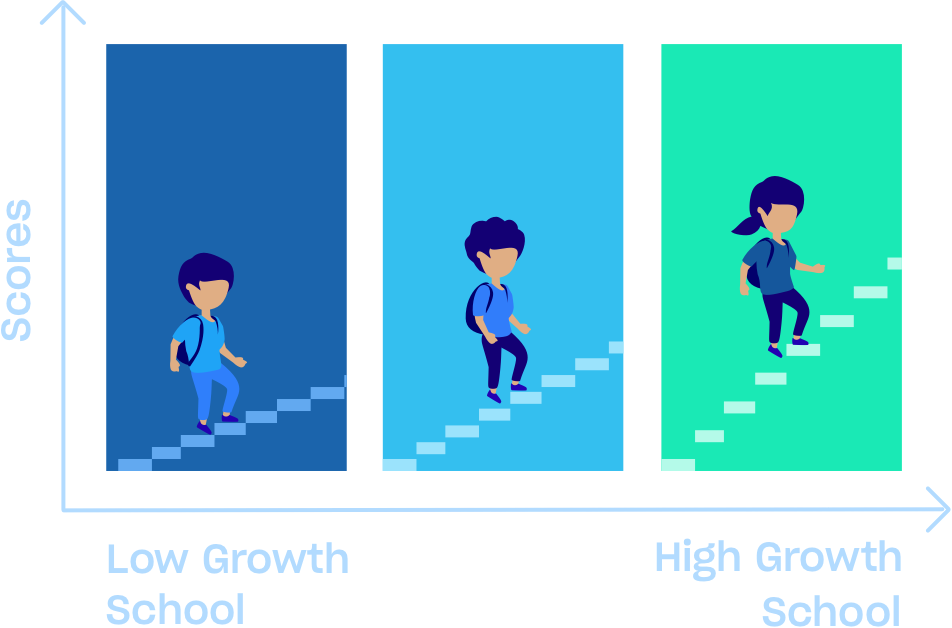

Learning Rate

The learning rate indicates approximately how much students learn in each grade in a school, district, or county. Because most educational opportunities in grades 3–8 are provided by schools, the average learning rate largely reflects school quality.

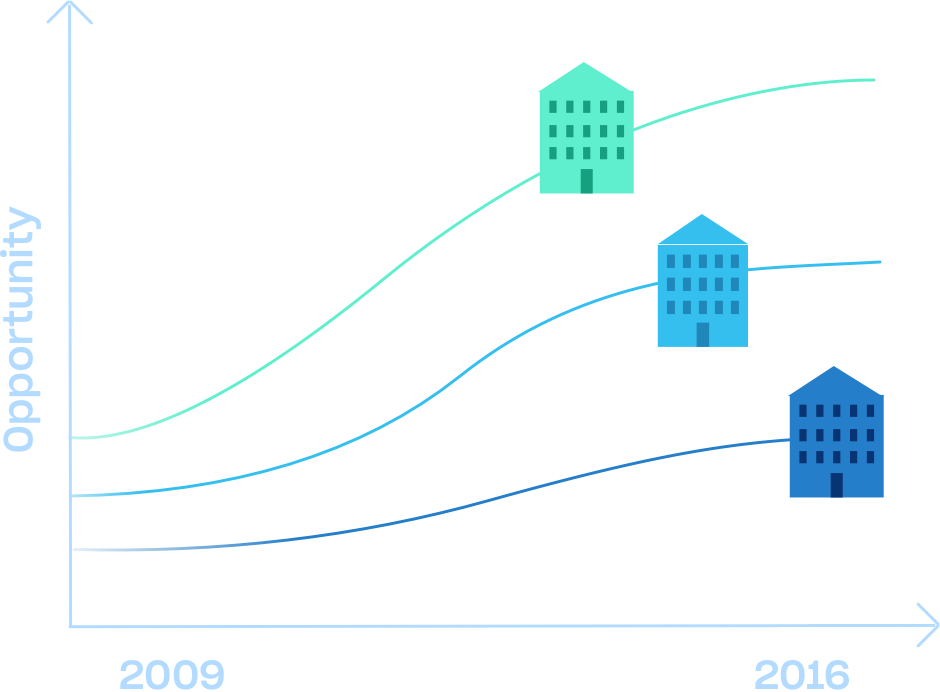

Trend in Test Scores

The trend in scores indicates how rapidly average test scores within a school, district, or county have changed over time. It reflects changes over time in the total set of educational opportunities (in and out of schools) available to children. For example, average scores might improve over time because the schools are improving and/or because more high-income families have moved into the community.

Educational Opportunity

A child’s educational opportunities include all experiences that contribute to learning the skills assessed on standardized tests. These include opportunities both in early childhood and during the schooling years, and experiences in homes, in neighborhoods, in child-care and preschool programs, with peers, and in schools.

SES

Socioeconomic status. The FAQ section describes the variables used to measure the average socioeconomic composition of families in each community, both overall and for different groups within a community.

Grade Level

As described in the Methods section, the test-score data in SEDA are adjusted so that a “4” represents the average test scores of 4th graders nationally, a “5” represents the average test scores of 5th graders nationally, and so on. One “grade level” thus corresponds to the average per-grade increase in test scores for students nationally.
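To illustrate how this scale can be read, the sketch below converts a score on the grade-level scale into grade levels above or below the national average for that grade. The function name and the example values are hypothetical.

```python
def grade_levels_from_average(score, grade):
    """Grade levels above (positive) or below (negative) the national average for a grade."""
    return score - grade

# Hypothetical example: 4th graders in a district average 5.5 on the grade-level scale.
print(grade_levels_from_average(5.5, 4))
```

Under this reading, a result of 1.5 would mean the district's 4th graders score about one and a half grade levels above the national 4th-grade average.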

Geographic School District

Most traditional public-school districts in the U.S. are defined by a geographic catchment area; the schools that fall within this boundary make up the geographically defined school district. The physical boundaries of geographic catchment areas have changed over time; we use the 2019 EDGE Elementary and Unified School District Boundaries to define geographic districts included in SEDA. Note that geographically defined school districts exclude special education schools (as identified by the Common Core of Data’s school type flag), regardless of the school’s physical location.

Data in the Opportunity Explorer are reported for geographic school districts. Data for administrative school districts can be downloaded on the Get the Data page.

Administrative School District

Administrative school districts are defined per National Center for Education Statistics (NCES), and the schools operated by each administrative district are identified using the NCES leaid.

Data in the Opportunity Explorer are reported for geographic school districts. Data for administrative school districts can be downloaded on the Get the Data page.

Gap in School Poverty

The gap in school poverty is a measure of school segregation. We use the proportion of students defined as “economically disadvantaged” in a school as a measure of school poverty. The Black-White gap in school poverty, for example, measures the difference between the poverty rate of the average Black student’s school and the poverty rate of the average White student’s school. When there is no segregation (that is, when White and Black students attend the same schools, or when White and Black students’ schools have equal poverty rates), the Black-White school poverty gap is zero. A positive Black-White school poverty gap means that Black students’ schools have higher poverty rates than White students’ schools, on average. A negative Black-White school poverty gap means that White students’ schools have higher poverty rates than Black students’ schools, on average.

Gap in Percent Minority Students in Schools

The gap in percent minority students in schools is a measure of school segregation. Percent minority students in schools is measured as the proportion of minority students (Black, Hispanic, and Native American students) in a student’s school. The Black-White gap in percent minority students in schools then measures the difference between the proportion of minority students in the average Black student’s school and the proportion of minority students in the average White student’s school. When there is no segregation—when White and Black students attend the same schools, or when White and Black students’ schools have equal proportions of minority students—the Black-White gap in percent minority students is 0. A positive Black-White gap in percent minority students means that Black students’ schools have higher shares of minority students than White students’ schools, on average. A negative Black-White gap in percent minority students means that White students’ schools have higher shares of minority students than Black students’ schools, on average.

Cohort

A group of students who began school together in the same year. For example, the 2006 cohort refers to students who were first enrolled in kindergarten in the fall of 2005 (and finished in the spring of 2006). While some of these students may repeat or skip a grade during school, the majority will progress through school together in the same grades each year.

Average

The average, or “mean,” is used to represent the typical or central value in a set of numbers.

Standard Deviation

The standard deviation indicates how spread out a set of numbers is relative to the average. The standard deviation represents the typical amount by which any single number differs from the average.

Standardized

When numbers in a set have been “standardized,” they have been transformed so that the average value is exactly 0 and the standard deviation is exactly 1. After being standardized, positive numbers represent values that are above the average and negative numbers represent values that are below the average.
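A minimal sketch of standardization, using Python's standard library, is shown below. It assumes the population standard deviation (`statistics.pstdev`); the text above does not say which variant is used, and the input numbers are illustrative.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to have mean 0 and (population) standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardize values with zero spread")
    return [(v - mu) / sigma for v in values]

raw = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # illustrative numbers
z = standardize(raw)
print(z)
```

Values below the original average come out negative and values above it come out positive, as described above.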

Achievement Test

A standardized test used to measure the knowledge and skills a student has attained in a particular subject, after instruction.

Test Score

The numeric score earned on an achievement test.

Achievement Level (or Proficiency Level or Proficiency Category)

Achievement levels indicate the extent to which student performance meets a set of prespecified standards for what students should know and be able to do. Each achievement test has three to five achievement levels defined. Achievement levels often have labels describing performance relative to grade-level expectations, such as “basic,” “proficient,” or “advanced.”

BIE

Bureau of Indian Education.

Cut Score/Threshold

The test scores, or boundaries, that separate a set of achievement levels.

Proficiency Data

A data set containing information about how many students in each group (where groups could be schools, districts, states, etc.) earned scores in each achievement level by year, grade, and subject.

Proficiency Rate

The percentage of students whose test scores were at or above the “proficient” achievement level in each grade and subject.
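The sketch below shows how a proficiency rate could be computed from proficiency data, that is, from counts of students in each achievement level. The level names, counts, and function name are hypothetical.

```python
# Hypothetical counts of students in each achievement level for one
# grade, subject, and year. The level names are illustrative.
counts = {"below basic": 20, "basic": 30, "proficient": 35, "advanced": 15}

# Levels ordered from lowest to highest.
ordered_levels = ["below basic", "basic", "proficient", "advanced"]

def proficiency_rate(counts, ordered_levels, proficient_level="proficient"):
    """Percent of students at or above the proficient level."""
    start = ordered_levels.index(proficient_level)
    at_or_above = sum(counts[level] for level in ordered_levels[start:])
    total = sum(counts[level] for level in ordered_levels)
    return 100.0 * at_or_above / total

rate = proficiency_rate(counts, ordered_levels)
print(rate)
```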

Linking

A set of statistical methods used to facilitate comparisons across different tests.

NAEP

The National Assessment of Educational Progress; see https://nces.ed.gov/nationsreportcard/about/ for more detail.

EDFacts

A database of school proficiency data from state accountability testing housed by the National Center for Education Statistics; see https://edfacts.ed.gov/ for more detail.

HETOP

The heteroskedastic ordered probit (HETOP) model is a statistical technique that can be used to analyze proficiency data.

ECD

Economically disadvantaged students.

FRPL

Free or reduced-price-lunch-eligible students.

Understanding the Data

Where do the test score data come from? What years, grades, and subjects are used?

The data are based on the achievement tests in math and Reading Language Arts (RLA) administered annually by each state to all public-school students in grades 3–8 from 2008–09 through 2018–19. In these years, 3rd through 8th graders in U.S. public schools took roughly 500 million standardized math and RLA tests. Their scores—provided to us in aggregated form by the U.S. Department of Education—are the basis of the data reported here.

We combine information on the test scores in each school, geographic district, county, or state with information from the National Assessment of Educational Progress (NAEP; see https://nces.ed.gov/nationsreportcard/about/) to compare scores from state tests on a common national scale (see the Methods page).

We never see nor use individual test scores in this process. The raw data we receive includes only counts of students scoring at different test-score levels, not individual test scores. There is no individual or individually-identifiable information included in the raw or public data.

What are “educational opportunities”?

Educational opportunities include all experiences that help a child learn the skills assessed on achievement tests. These include opportunities both in early childhood and during the schooling years, and experiences in homes, in neighborhoods, in child-care and preschool programs, with peers, and in schools.

For more information, see our interactive discovery, “Affluent Schools Are Not Always the Best Schools.”

What do average test scores tell us?

To understand the role of educational opportunities in shaping average test-score patterns, it is necessary to distinguish between individual scores and average scores in a given school, geographic district, county, or state.

Differences in two students’ individual test scores at a given age reflect both differences in their individual characteristics and abilities and differences in the educational opportunities they have had. However, because the average innate abilities of students born in one community do not differ from those born in another place, any difference in average test scores must reflect differences in the educational opportunities available in the two communities.

Why are there three different summaries of test scores (average scores, learning rates, and trends in scores) in each place? What can we learn from each of these?

The three scores tell different stories.

- Average test score: The average test score indicates how well the average student in a school, district, or county performs on standardized tests. Importantly, many factors, both early in life and when children are in school, affect test performance. As a result, the average test scores in a school, district, county, or state reflect the total set of educational opportunities children have had from birth through middle school, including opportunities at home, in child-care and preschool programs, and among peers. Average test scores therefore reflect a mix of school quality and out-of-school educational opportunities.

- Learning rate: The learning rate indicates approximately how much students learn in each grade in a school, district, county, or state. Because most educational opportunities in grades 3–8 are provided by schools, the average learning rate largely reflects school quality.

- Trend in test scores: The trend in scores indicates how rapidly average test scores within a school, district, county, or state have changed over time. It reflects changes over time in the total set of educational opportunities (in and out of schools) available to children. For example, average scores might improve over time because the schools are improving and/or because more high-income families have moved into the community.

For more information on how the average, learning rate, and trend in test scores are computed, see the Methods page.

What estimates are shown on the website?

We report average test scores, learning rates, and trends in average test scores for schools, geographic districts, counties, and states in our Opportunity Explorer. To access data for the other units (e.g., administrative districts, commuting zones, and metropolitan statistical areas) or other types of estimates (e.g., estimates separately by subject, grade, and year), please visit our Get the Data page.

What schools are included in the data?

All public schools—traditional, charter, and magnet schools—that serve students in any grade from 3 through 8 are included in the data. Schools that enroll both high school students and students in some of grades 3–8 are included, but the reported test-score measures are based only on the scores of students in grades 3–8. For example, schools serving grades 7–12 are included in the data, but the test scores we use are only those from students in grades 7–8.

Schools run by the Bureau of Indian Education (BIE) are included in the data. Specifically, we include estimates based on reading and math tests taken by students in BIE schools in the years 2008-09 through 2011-12, and in 2015-16 and 2016-17. The BIE did not report test score data for other years to EDFacts.

In most states, students in private schools do not take the annual state accountability tests that we use to build SEDA. Therefore, no test scores from private schools are included in the raw or public data.

We have some special inclusion rules for the following school types:

- Charter schools: Estimates for charter schools are included in the school-level data files and on the website. Geographic district and county estimates include data for charter schools; however, charter schools are assigned to the geographic district or county in which they are physically located. In other words, a charter school may be counted as part of a geographic district even if that district does not operate the school.

- Special education schools: Estimates for special education schools are included in the school-level data files and on the website; however, data for special education schools are not included in the geographic district or county estimates.

- Virtual schools: Estimates for virtual schools are not shown on the website but are available for download in the school-level data files. Virtual school data are not included in geographic district or county estimates.

A data file indicating which schools are counted as part of geographic districts, administrative districts, and counties is available on the Get the Data page.

How accurate are the data? What should I do if I find an error in the data?

We have taken several steps to ensure the accuracy of the data reported here. The statistical and psychometric methods underlying the data we report are published in peer-reviewed journals and in the technical documentation described on the Methods page.

Along with each estimate, we also report a margin of error equal to a 95% confidence interval. For example, a margin of error of +/- .1 indicates that we have 95% confidence that the true value is within +/- .1 of the estimated value. Large margins of error signal that we are not confident in the estimate reported on the site.
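As a rough sketch of how a margin of error translates into an interval, the snippet below builds the lower and upper bounds around an estimate. The second helper assumes a normal approximation in which a 95% interval corresponds to about 1.96 standard errors; that is an assumption for illustration, not a statement of how SEDA computes its margins. All names and numbers are hypothetical.

```python
def confidence_interval(estimate, margin_of_error):
    """Return the (lower, upper) bounds implied by a reported margin of error."""
    return (estimate - margin_of_error, estimate + margin_of_error)

def margin_from_standard_error(standard_error, z=1.96):
    """Assumption: normal approximation, where 95% corresponds to z of about 1.96."""
    return z * standard_error

low, high = confidence_interval(0.5, 0.25)
print(low, high)
```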

Nonetheless, there may still be errors in the data files that we have not yet identified. If you believe you have found an error in the data, we would appreciate knowing about it. Please contact our SEDA support team at sedasupport@stanford.edu. (For messages about the Segregation Explorer, please email segxsupport@stanford.edu.)

Are these data available for researchers or others to use?

Yes. These data are available for researchers to use under the terms of our data use agreement. You can download the data files and full technical documentation on our Get the Data page.

Are the test-score measures (average scores, learning rates, and trends) adjusted to take into account differences in student demographic characteristics or any other student or school variables?

No. The measures of average test scores, average learning rates, and average test-score trends are based solely on test-score data.

On the website, we flag schools that serve exceptional student populations (large proportions of students with disabilities, students enrolled in gifted/talented programs, or students with limited English proficiency). These students’ characteristics should be taken into consideration when interpreting the test-score data and comparing performance to that of public schools serving more general populations.

- Special education schools or schools with a high percentage of students with disabilities: We flag schools that are explicitly identified as special education schools by the Common Core of Data (CCD) or the Civil Rights Data Collection (CRDC). We also flag schools where more than 40% of students are identified as having a disability in the CRDC data. Students with disabilities are identified per the Individuals with Disabilities Education Act (IDEA).

- Schools with a high percentage of students in gifted/talented programs or with selective admissions: We flag schools where more than 40% of the students are enrolled in a gifted/talented program according to the CRDC data. We also flag some schools with selective-admission policies (schools where students must pass a test to be admitted), but we do not have a comprehensive list of such schools, so not all selective-admissions schools are yet identified in our data.

- Schools with a high percentage of limited English-proficient students: We flag schools where more than 50% of the students are identified as limited English proficient (LEP) in the CRDC data. LEP students are classified by state definitions based on Title IX of the Elementary and Secondary Education Act (ESEA).
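The percentage thresholds in the list above can be sketched as a small function. The function name, argument names, and flag labels are hypothetical, and the sketch omits the selective-admissions flag because it relies on a list of schools rather than a threshold.

```python
def population_flags(is_special_ed_school, pct_disability, pct_gifted, pct_lep):
    """Return flags using the thresholds stated above (percentages on a 0-100 scale)."""
    flags = []
    if is_special_ed_school or pct_disability > 40:
        flags.append("special education or high share of students with disabilities")
    if pct_gifted > 40:
        flags.append("high share of students in gifted/talented programs")
    if pct_lep > 50:
        flags.append("high share of limited English-proficient students")
    return flags

# Hypothetical school: 12% students with disabilities, 45% gifted, 10% LEP.
print(population_flags(False, 12, 45, 10))
```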

The downloadable SEDA data files include student, community, school, and district characteristics that researchers and others can use.

How is socioeconomic status measured?

For each geographic district or county, we use data from the Census Bureau’s American Community Survey (ACS) to create estimates of the average socioeconomic status (SES) of families. Every year, the ACS surveys families in each community in the U.S. We use six community characteristics reported in 5-year rolling surveys from 2005-2009 through 2015-2019 to construct a composite measure of SES in each community:

- Median income

- Percentage of adults age 25 and older with a bachelor’s degree or higher

- Poverty rate among households with children age 5–17

- Percentage of households receiving benefits from the Supplemental Nutrition Assistance Program (SNAP)

- Percentage of households headed by single mothers

- Employment rate for adults age 25–64

The composite SES measure is standardized so that a value of 0 represents the SES of the average school district in the U.S. Approximately two-thirds of districts have SES values between -1 and +1, and approximately 95% have SES values between -2 and +2 (so values larger than 2 or smaller than -2 represent communities with very high or very low average socioeconomic status, respectively). In some places we cannot calculate a reliable measure of socioeconomic status, because the ACS samples are too small; in these cases, no value for SES is reported. For more detailed information, please see the technical documentation.

What does the gap in school poverty measure?

The gap in school poverty (shown in the secondary gap charts) is a measure of school segregation. We use the proportion of students defined as “economically disadvantaged” in a school as a measure of school poverty. The Black-White gap in school poverty, for example, measures the difference between the poverty rate of the average Black student’s school and the poverty rate of the average White student’s school. When there is no segregation—when White and Black students attend the same schools, or when White and Black students’ schools have equal poverty rates—the Black-White school-poverty gap is 0. A positive Black-White school-poverty gap means that Black students’ schools have higher poverty rates than White students’ schools, on average. A negative Black-White school-poverty gap means that White students’ schools have higher poverty rates than Black students’ schools, on average.
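One way to read “the poverty rate of the average Black student’s school” is as an enrollment-weighted average of school poverty rates, weighted by the number of Black students in each school. The sketch below uses that reading as an assumption; the two schools and all names are hypothetical.

```python
# Each hypothetical school: (Black enrollment, White enrollment, poverty rate in percent).
schools = [
    (300, 100, 80),
    (100, 300, 20),
]

def average_school_poverty(schools, group_index):
    """Enrollment-weighted mean poverty rate of the schools a group attends."""
    total = sum(s[group_index] for s in schools)
    return sum(s[group_index] * s[2] for s in schools) / total

black_avg = average_school_poverty(schools, 0)
white_avg = average_school_poverty(schools, 1)
gap = black_avg - white_avg  # positive: Black students' schools are poorer on average
print(black_avg, white_avg, gap)
```

If both groups were spread evenly across the two schools, the two weighted averages would match and the gap would be 0, in line with the no-segregation case described above.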

What does the gap in percent minority students in schools measure?

The gap in percent minority students in schools is a measure of school racial segregation. We calculate the Black-White, Hispanic-White, Native American-White, and Asian-White gaps in percent minority students in schools as (1) the percent of minority students in the average Black, Hispanic, Native American, or Asian student’s school minus (2) the percent of minority students in the average White student’s school within a given district, county, or state. When this gap is zero, students in the two racial/ethnic groups attend the same schools, or their schools have equal proportions of minority students on average (no racial segregation). A positive gap indicates that there are more minority students in the average Black, Hispanic, Native American, or Asian student’s school compared with the average White student’s school. A negative gap indicates the opposite.

For these calculations, we define the percent of minority students as the percent of students in a school who are Black, Hispanic, or Native American. On average, these racial/ethnic groups have had limited educational opportunities due to low socioeconomic resources, historical societal discrimination, and the structure of American schooling; these limited educational opportunities have led to low average achievement on standardized tests. We do not include Asian students in the definition of this measure because Asian students tend to have higher socioeconomic status than White and other racial/ethnic groups; that is, Asian families are not, on average, socioeconomically disadvantaged relative to White families in the U.S.

There are multiple limitations to this definition of minority. First, we recognize that by grouping Black, Hispanic, and Native American students, we are not able to observe differences in average educational experiences of students belonging to the different racial/ethnic groups. Second, the exclusion of Asian students from this measure based on their average performance does not account for the diversity of the Asian subpopulations. Moreover, it is important to acknowledge that Asian families have been discriminated against socially and economically within the U.S., and this discrimination likely affects their educational experiences.

For SEDA, we report measures of differences in minority composition because this has long been the conventional way of thinking about segregation. However, our data shows that this measure is not predictive of unequal opportunity once we take into account differential school poverty composition.

Why are the data here different from the results reported by my state?

SEDA results may differ from publicly reported state test scores.

States typically report test scores in terms of percentages of proficient students. They may also report data only for a single year and grade or may average the percentages of proficient students across grades. Measures based on the percentage of proficiency are generally not comparable across states, grades, or test subjects, and are often not comparable across years because of differences in the tests administered and differences in states’ definitions of “proficiency.” See the Methods page for more details.

States often rank their schools or provide summary ratings for schools. These may take a number of factors into account, not just test scores. This makes them very difficult to compare across states and grades and over time.

In contrast, the test-score measures we report here are based on more detailed information about students’ test scores. They are adjusted to account for differences in tests and proficiency standards across states, years, and grades.

Why are there no data for my school, district, or county in the Explorer?

There are several reasons that the Explorer may not display data for a school, geographic district, or county:

- The unit is too small and/or has too few grades to allow for an accurate estimate.

- More than 20% of students in the unit took alternative assessments rather than the regular tests.

- Data for the unit were not reported to the National Center for Education Statistics.

For more details, please see our Methods page.

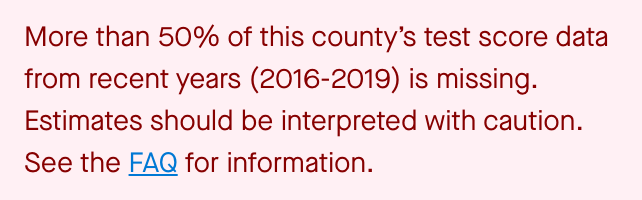

Why is my school or district flagged as missing data for recent years (2016-2019)?

If a school or geographic district is flagged (see image below) as missing more than 50% of test score data for recent years (2016-2019), this means that estimates are based on older data (before 2016). Why? Fewer data were reported from 2016 through 2019 because of state test opt-outs, which began in the spring of 2015. Reasons for opting out vary, and the number of parents opting students out of state assessments also varies from year to year. To learn more about opt-out policies for students in your area, we recommend that you visit your local school, district, or state website.

Whom should I contact with additional questions?

For questions about SEDA, please contact sedasupport@stanford.edu. For questions about the Segregation Explorer please email segxsupport@stanford.edu.

How can I learn more?

The Discoveries pages provide guidance on interpreting the data; the Glossary section and Methods page provide an overview of the process used to construct these data.

Using the Opportunity Explorer

How can I get help using the Educational Opportunity Explorer?

The Educational Opportunity Explorer offers a FAQ within the Explorer itself. Press the button to get answers to your questions on using the Explorer and interpreting its map and charts.

If your questions are not answered there, please reach out to help@edopportunity.org for questions on how to use the Educational Opportunity Explorer and website.